Updating the application

When new resources are created or updated, application configurations often need to be adjusted to use them. In Kubernetes, environment variables are a common way to supply configuration, and they can be passed to containers through the env field of the container spec when creating Deployments.
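For reference, here is a minimal sketch of that env field in a Deployment. The Deployment name, labels and image are hypothetical placeholders, not the carts manifests used in this lab. Only the variable name is borrowed from the carts configuration shown later.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-app                  # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: example-app               # hypothetical label
  template:
    metadata:
      labels:
        app: example-app
    spec:
      containers:
        - name: app
          image: example.com/app:1.0 # hypothetical image
          env:
            # Literal name/value pair exposed to the container as an environment variable
            - name: RETAIL_CART_PERSISTENCE_PROVIDER
              value: dynamodb
```

Hard-coding values in the Pod spec like this works, but Kubernetes also lets you keep them in separate objects that several workloads can reference.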

There are two primary ways to supply these values from separate Kubernetes objects:

- ConfigMaps: core Kubernetes resources that let us pass configuration such as environment variables, text fields, and other items as key-value pairs for use in Pod specs.

- Secrets: similar to ConfigMaps, but intended for sensitive information. Note that Secrets are not encrypted by default in Kubernetes: their values are only base64-encoded and are stored unencrypted in etcd unless encryption at rest is configured.
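As a rough illustration of both options, the sketch below defines a ConfigMap and a Secret and has a Pod read them as environment variables. Every name and value here is a hypothetical placeholder.

```yaml
# Hypothetical ConfigMap holding non-sensitive settings
apiVersion: v1
kind: ConfigMap
metadata:
  name: example-config
data:
  RETAIL_CART_PERSISTENCE_PROVIDER: dynamodb
---
# Hypothetical Secret; stringData values are stored base64-encoded in .data (encoding, not encryption)
apiVersion: v1
kind: Secret
metadata:
  name: example-secret
type: Opaque
stringData:
  API_TOKEN: replace-me
---
# Pod that consumes both objects
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  containers:
    - name: app
      image: example.com/app:1.0
      envFrom:
        # Every key in the ConfigMap becomes an environment variable
        - configMapRef:
            name: example-config
      env:
        # A single key pulled from the Secret
        - name: API_TOKEN
          valueFrom:
            secretKeyRef:
              name: example-secret
              key: API_TOKEN
```

envFrom is convenient when a whole ConfigMap maps onto the application's settings, while valueFrom picks individual keys, which keeps the exposure of sensitive values explicit.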

The ACK FieldExport custom resource was designed to bridge the gap between managing the control plane of your ACK resources and using the properties of those resources in your application. It configures an ACK controller to export any spec or status field from an ACK resource into a Kubernetes ConfigMap or Secret. The exported values are updated automatically whenever the source field changes, and you can mount the ConfigMap or Secret into your Kubernetes Pods as environment variables.
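A FieldExport for this scenario might look roughly like the sketch below, which copies spec.tableName from an ACK DynamoDB Table resource into a ConfigMap. The resource names are hypothetical, and the field layout reflects our understanding of the ACK services.k8s.aws/v1alpha1 FieldExport API, so check it against the ACK documentation for your controller version. This lab does not apply it.

```yaml
apiVersion: services.k8s.aws/v1alpha1
kind: FieldExport
metadata:
  name: carts-table-name-export   # hypothetical name
  namespace: carts
spec:
  to:
    # Target object to write into; kind is either configmap or secret
    kind: configmap
    name: carts-ack-export        # hypothetical ConfigMap name
  from:
    # Field of the ACK resource to read
    path: ".spec.tableName"
    resource:
      group: dynamodb.services.k8s.aws
      kind: Table
      name: carts-table           # hypothetical ACK Table resource name
```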

For this lab, we'll update the ConfigMap for the carts component directly instead. We'll remove the settings that point it at the local DynamoDB instance (its endpoint, static credentials, and table-creation flag) and set the table name to the DynamoDB table created by ACK:

Kustomize Patch:

```yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - ../../../../../base-application/carts
patches:
  - path: carts-serviceAccount.yaml
configMapGenerator:
  - name: carts
    namespace: carts
    env: config.properties
    behavior: replace
    options:
      disableNameSuffixHash: true
```

ConfigMap/carts:

```yaml
apiVersion: v1
data:
  RETAIL_CART_PERSISTENCE_DYNAMODB_TABLE_NAME: ${EKS_CLUSTER_NAME}-carts-ack
  RETAIL_CART_PERSISTENCE_PROVIDER: dynamodb
kind: ConfigMap
metadata:
  name: carts
  namespace: carts
```

Diff:

```diff
 apiVersion: v1
 data:
-  AWS_ACCESS_KEY_ID: key
-  AWS_SECRET_ACCESS_KEY: secret
-  RETAIL_CART_PERSISTENCE_DYNAMODB_CREATE_TABLE: "true"
-  RETAIL_CART_PERSISTENCE_DYNAMODB_ENDPOINT: http://carts-dynamodb:8000
-  RETAIL_CART_PERSISTENCE_DYNAMODB_TABLE_NAME: Items
+  RETAIL_CART_PERSISTENCE_DYNAMODB_TABLE_NAME: ${EKS_CLUSTER_NAME}-carts-ack
   RETAIL_CART_PERSISTENCE_PROVIDER: dynamodb
 kind: ConfigMap
 metadata:
   name: carts
```
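The table name in this ConfigMap, ${EKS_CLUSTER_NAME}-carts-ack, belongs to a table managed through ACK. For orientation, an ACK DynamoDB Table resource that produces a table with that name could look roughly like the sketch below. The resource name and key schema are hypothetical and not taken from this lab, and ${EKS_CLUSTER_NAME} is assumed to be substituted before the manifest is applied.

```yaml
apiVersion: dynamodb.services.k8s.aws/v1alpha1
kind: Table
metadata:
  name: items                 # hypothetical Kubernetes resource name
  namespace: carts
spec:
  # Name of the table as it appears in DynamoDB
  tableName: ${EKS_CLUSTER_NAME}-carts-ack
  billingMode: PAY_PER_REQUEST
  attributeDefinitions:
    - attributeName: id       # hypothetical partition key
      attributeType: S
  keySchema:
    - attributeName: id
      keyType: HASH
```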

We also need to provide the carts Pods with the appropriate IAM permissions to access the DynamoDB service. An IAM role has already been created, and we'll apply this to the carts Pods using IAM Roles for Service Accounts (IRSA):

Kustomize Patch:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: carts
  namespace: carts
  annotations:
    eks.amazonaws.com/role-arn: ${CARTS_IAM_ROLE}
```

ServiceAccount/carts:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  annotations:
    eks.amazonaws.com/role-arn: ${CARTS_IAM_ROLE}
  name: carts
  namespace: carts
```

Diff:

```diff
 apiVersion: v1
 kind: ServiceAccount
 metadata:
+  annotations:
+    eks.amazonaws.com/role-arn: ${CARTS_IAM_ROLE}
   name: carts
   namespace: carts
```

To learn more about how IRSA works, see here.
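IRSA only takes effect for Pods that run as the annotated ServiceAccount. A minimal sketch of that link in a Deployment follows. It assumes the carts Deployment sets serviceAccountName: carts; the labels and image are hypothetical, and the real Deployment is assumed to come from the base-application/carts resources referenced by the Kustomization.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: carts
  namespace: carts
spec:
  selector:
    matchLabels:
      app: carts                          # hypothetical label
  template:
    metadata:
      labels:
        app: carts
    spec:
      # Run the Pods as the ServiceAccount carrying the eks.amazonaws.com/role-arn annotation
      serviceAccountName: carts
      containers:
        - name: carts
          image: example.com/carts:latest # hypothetical image
          envFrom:
            # Settings from the carts ConfigMap shown above
            - configMapRef:
                name: carts
          # With IRSA, the EKS Pod Identity Webhook mutates each Pod when it is created,
          # injecting AWS_ROLE_ARN, AWS_WEB_IDENTITY_TOKEN_FILE and a projected token volume.
          # Pods that already exist are not mutated.
```

You don't need to apply this sketch: the Kustomization above only patches the ServiceAccount and the ConfigMap.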

Let's apply this new configuration:

Now we need to restart the carts Pods to pick up our new ConfigMap contents:

```text
deployment.apps/carts restarted
Waiting for deployment "carts" rollout to finish: 1 old replicas are pending termination...
deployment "carts" successfully rolled out
```
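Environment variables sourced from a ConfigMap are read only when a container starts, so running Pods keep their old values until they are replaced. A restart such as kubectl rollout restart triggers that replacement by stamping the Deployment's Pod template with an annotation. The Deployment controller treats this as a template change and rolls out new Pods. The fragment below shows the shape of that annotation; the timestamp is illustrative.

```yaml
# Fragment of the carts Deployment after a rollout restart
spec:
  template:
    metadata:
      annotations:
        # A new value here changes the Pod template, so the Deployment rolls out fresh Pods
        kubectl.kubernetes.io/restartedAt: "2024-01-01T12:00:00Z"
```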

To verify that the application is working with the new DynamoDB table, we can interact with it through a browser. An NLB has been created to expose the sample application for testing:

http://k8s-ui-uinlb-647e781087-6717c5049aa96bd9.elb.us-west-2.amazonaws.com

The actual endpoint will be different when you run this command, because a new Network Load Balancer is provisioned for your environment.
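The NLB was created for you, so there is nothing to apply here. For orientation only, a Service like the sketch below is one common way to get an internet-facing NLB when the AWS Load Balancer Controller is installed. The controller, the Service name ui-nlb, its namespace, the selector and the ports are all assumptions; the names are inferred loosely from the example hostname.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ui-nlb                 # hypothetical name
  namespace: ui                # hypothetical namespace
  annotations:
    # Hand the Service to the AWS Load Balancer Controller, which provisions an NLB
    service.beta.kubernetes.io/aws-load-balancer-type: external
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: instance
spec:
  type: LoadBalancer
  selector:
    app: ui                    # hypothetical Pod label
  ports:
    - name: http
      port: 80
      targetPort: 8080         # hypothetical container port
```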

To wait until the load balancer has finished provisioning, you can run this command:

Once the load balancer is provisioned, you can access it by pasting the URL into your web browser. You'll see the web store UI and can navigate around the site as a user.

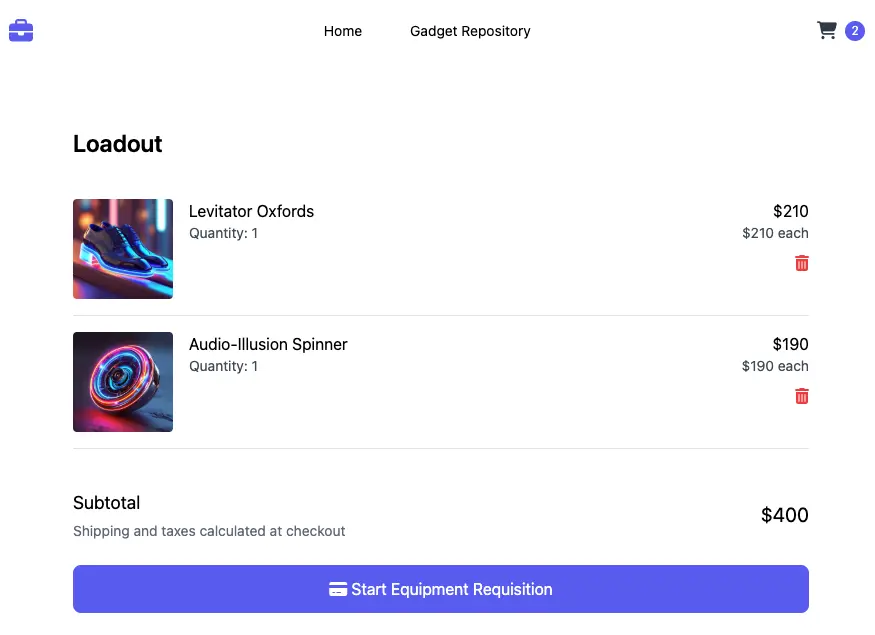

To verify that the Carts module is indeed using the DynamoDB table we just provisioned, try adding a few items to the cart.

To confirm that these items are also in the DynamoDB table, run:

Congratulations! You've successfully created AWS resources without leaving the Kubernetes API!