StatefulSet with EBS Volume

Now that we understand StatefulSets and Dynamic Volume Provisioning, let's change our MySQL DB on the Catalog microservice to provision a new EBS volume to store database files persistently.

Utilizing Kustomize, we'll do two things:

- Create a new StatefulSet for the MySQL database used by the catalog component which uses an EBS volume

- Update the catalog component to use this new version of the database

Why are we not updating the existing StatefulSet? The fields we need to update are immutable and cannot be changed.

Here is the new catalog database StatefulSet:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: catalog-mysql-ebs
  namespace: catalog
  labels:
    app.kubernetes.io/created-by: eks-workshop
    app.kubernetes.io/team: database
spec:
  replicas: 1
  serviceName: catalog-mysql-ebs
  selector:
    matchLabels:
      app.kubernetes.io/name: catalog
      app.kubernetes.io/instance: catalog
      app.kubernetes.io/component: mysql-ebs
  template:
    metadata:
      labels:
        app.kubernetes.io/name: catalog
        app.kubernetes.io/instance: catalog
        app.kubernetes.io/component: mysql-ebs
        app.kubernetes.io/created-by: eks-workshop
        app.kubernetes.io/team: database
    spec:
      containers:
        - name: mysql
          image: "public.ecr.aws/docker/library/mysql:8.0"
          imagePullPolicy: IfNotPresent
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: my-secret-pw
            - name: MYSQL_DATABASE
              value: catalog
            - name: MYSQL_USER
              valueFrom:
                secretKeyRef:
                  name: catalog-db
                  key: RETAIL_CATALOG_PERSISTENCE_USER
            - name: MYSQL_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: catalog-db
                  key: RETAIL_CATALOG_PERSISTENCE_PASSWORD
          volumeMounts:
            - name: data
              mountPath: /var/lib/mysql
          ports:
            - name: mysql
              containerPort: 3306
              protocol: TCP
      volumes:
        - name: data
          emptyDir: {}
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: ebs-csi-default-sc
        resources:
          requests:
            storage: 30Gi

The volumeClaimTemplates field instructs Kubernetes to utilize Dynamic Volume Provisioning to create a new EBS Volume, a PersistentVolume (PV) and a PersistentVolumeClaim (PVC) all automatically.

The storageClassName is set to ebs-csi-default-sc, which is the name of the default storage class.

We are requesting a 30GiB EBS volume.

This is how we'll re-configure the catalog component itself to use the new StatefulSet:

Kustomize Patch:

apiVersion: v1
kind: ConfigMap
metadata:
  name: catalog
data:
  RETAIL_CATALOG_PERSISTENCE_ENDPOINT: catalog-mysql-ebs:3306

ConfigMap/catalog:

apiVersion: v1
data:
  RETAIL_CATALOG_PERSISTENCE_DB_NAME: catalog
  RETAIL_CATALOG_PERSISTENCE_ENDPOINT: catalog-mysql-ebs:3306
  RETAIL_CATALOG_PERSISTENCE_PROVIDER: mysql
kind: ConfigMap
metadata:
  name: catalog
  namespace: catalog

Diff:

 apiVersion: v1
 data:
   RETAIL_CATALOG_PERSISTENCE_DB_NAME: catalog
-  RETAIL_CATALOG_PERSISTENCE_ENDPOINT: catalog-mysql:3306
+  RETAIL_CATALOG_PERSISTENCE_ENDPOINT: catalog-mysql-ebs:3306
   RETAIL_CATALOG_PERSISTENCE_PROVIDER: mysql
 kind: ConfigMap
 metadata:
   name: catalog

Apply the changes and wait for the new Pods to be rolled out:
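A minimal sketch of this step, assuming `kubectl` is already pointed at the workshop cluster. The helper name is illustrative, the Kustomize directory is passed as an argument because its path is not given here, and the 100-second timeout is an arbitrary choice.

```shell
# Apply a Kustomize directory, then block until the new StatefulSet is rolled out.
# $1: path to the directory holding kustomization.yaml (assumption: supply your own).
apply_and_wait() {
  local kustomize_dir="$1"
  kubectl apply -k "$kustomize_dir" &&
    kubectl rollout status statefulset/catalog-mysql-ebs -n catalog --timeout=100s
}
```

Call it as `apply_and_wait <path-to-kustomize-dir>`; the rollout check only runs if the apply succeeds.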

Let's now confirm that our newly deployed StatefulSet is running:
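One way to run this check, sketched as a small helper. The function name is illustrative; the namespace and StatefulSet name come from the manifest above.

```shell
# Show the READY count and age of the new StatefulSet.
show_statefulset() {
  kubectl get statefulset -n catalog catalog-mysql-ebs
}
```

Running `show_statefulset` should print a short table like this: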

NAME                READY   AGE
catalog-mysql-ebs   1/1     79s

Inspecting our catalog-mysql-ebs StatefulSet, we can see that it now has a PersistentVolumeClaim attached to it with 30GiB and a storageClassName of ebs-csi-default-sc.
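A sketch of how this inspection could be done, assuming the claim is read from the StatefulSet's `.spec.volumeClaimTemplates` field. The helper name is illustrative.

```shell
# Print the volumeClaimTemplates of the StatefulSet as compact JSON.
show_claim_templates() {
  kubectl get statefulset -n catalog catalog-mysql-ebs \
    -o jsonpath='{.spec.volumeClaimTemplates}'
}
```

If `jq` is installed, `show_claim_templates | jq .` pretty-prints the result.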

[
  {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {
      "creationTimestamp": null,
      "name": "data"
    },
    "spec": {
      "accessModes": [
        "ReadWriteOnce"
      ],
      "resources": {
        "requests": {
          "storage": "30Gi"
        }
      },
      "storageClassName": "ebs-csi-default-sc",
      "volumeMode": "Filesystem"
    },
    "status": {
      "phase": "Pending"
    }
  }
]

We can see how Dynamic Volume Provisioning automatically created a PersistentVolume (PV) for us:
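A possible way to list it, sketched as a helper with an illustrative name. PersistentVolumes are cluster-scoped, so the sketch filters the list locally with `grep` on the claim's namespace, which also drops the header row.

```shell
# List PersistentVolumes and keep only the rows that mention the catalog namespace.
show_catalog_pv() {
  kubectl get pv | grep -i catalog
}
```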

pvc-1df77afa-10c8-4296-aa3e-cf2aabd93365 30Gi RWO Delete Bound catalog/data-catalog-mysql-ebs-0 gp2 10m

Utilizing the AWS CLI, we can check the Amazon EBS volume that was created automatically for us:
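A hedged sketch of such a lookup, assuming the AWS CLI is configured for the cluster's account and region. It filters on the `kubernetes.io/created-for/pvc/name` tag; the helper name and the fields selected in `--query` are illustrative choices.

```shell
# Find the EBS volume by the tag that records the name of the PVC it backs.
find_ebs_volume() {
  aws ec2 describe-volumes \
    --filters "Name=tag:kubernetes.io/created-for/pvc/name,Values=data-catalog-mysql-ebs-0" \
    --query "Volumes[*].{ID:VolumeId,SizeGiB:Size,State:State}"
}
```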

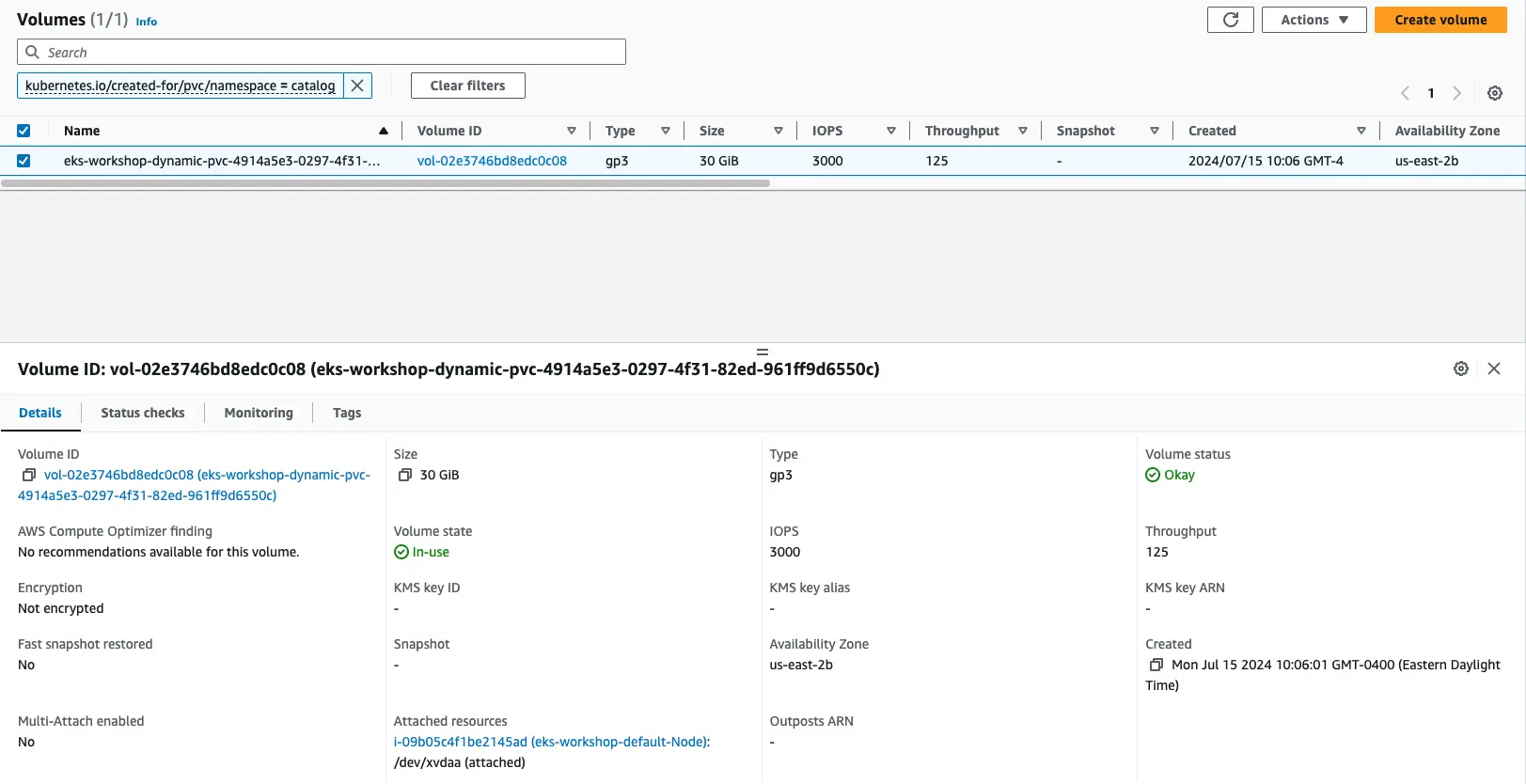

If you prefer, you can also check it in the AWS console: look for the EBS volume with the tag key kubernetes.io/created-for/pvc/name and the value data-catalog-mysql-ebs-0.

If you'd like to inspect the container shell and check the newly attached EBS volume from the Linux OS, run a shell command inside the catalog-mysql-ebs container to list the mounted file systems:
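A minimal sketch, assuming the Pod is named catalog-mysql-ebs-0 (the first and only replica of the StatefulSet). The helper name is illustrative.

```shell
# Run df inside the MySQL container to list mounted file systems in human-readable units.
show_mounts() {
  kubectl exec -n catalog catalog-mysql-ebs-0 -- df -h
}
```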

Filesystem      Size  Used Avail Use% Mounted on
overlay         100G  7.6G   93G   8% /
tmpfs            64M     0   64M   0% /dev
tmpfs           3.8G     0  3.8G   0% /sys/fs/cgroup
/dev/nvme0n1p1  100G  7.6G   93G   8% /etc/hosts
shm              64M     0   64M   0% /dev/shm
/dev/nvme1n1     30G  211M   30G   1% /var/lib/mysql
tmpfs           7.0G   12K  7.0G   1% /run/secrets/kubernetes.io/serviceaccount
tmpfs           3.8G     0  3.8G   0% /proc/acpi
tmpfs           3.8G     0  3.8G   0% /sys/firmware

Check the disk that is currently mounted on /var/lib/mysql. This is the EBS volume where the stateful MySQL database files are stored persistently.

Let's now test whether our data is in fact persistent. We'll create the same test.txt file in exactly the same way as we did in the first section of this module:
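A sketch of one way to write the file, assuming the Pod name catalog-mysql-ebs-0 and the content `123`. The redirect sits inside the quoted `bash -c` string so that it happens in the container rather than on your machine. The helper name is illustrative.

```shell
# Write "123" to test.txt on the EBS-backed mount inside the MySQL container.
write_test_file() {
  kubectl exec -n catalog catalog-mysql-ebs-0 -- \
    bash -c 'echo 123 > /var/lib/mysql/test.txt'
}
```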

Now, let's verify that our test.txt file was created in the /var/lib/mysql directory:
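A possible check, sketched with an illustrative helper name. The directory is listed inside the container and the result is filtered locally, so only entries containing "test" are shown.

```shell
# List /var/lib/mysql in the container and keep only the lines that mention "test".
list_test_file() {
  kubectl exec -n catalog catalog-mysql-ebs-0 -- ls -l /var/lib/mysql | grep -i test
}
```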

-rw-r--r-- 1 root root 4 Oct 18 13:57 test.txt

Now, let's remove the current catalog-mysql-ebs Pod, which will force the StatefulSet controller to automatically re-create it:
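A minimal sketch of the deletion, using the Pod name that appears in the output that follows. The helper name is illustrative.

```shell
# Delete the single MySQL Pod; the StatefulSet controller re-creates it under the same name.
delete_db_pod() {
  kubectl delete pod -n catalog catalog-mysql-ebs-0
}
```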

pod "catalog-mysql-ebs-0" deleted

Wait for a few seconds, and run the command below to check if the catalog-mysql-ebs Pod has been re-created:
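One way to do this, sketched under the assumption that the Pod is selected by the `app.kubernetes.io/component=mysql-ebs` label from the StatefulSet template. The helper name and the 60-second timeout are illustrative.

```shell
# Block until the re-created Pod reports Ready, then list it.
wait_for_db_pod() {
  kubectl wait --for=condition=Ready pod -n catalog \
    -l app.kubernetes.io/component=mysql-ebs --timeout=60s &&
    kubectl get pods -n catalog -l app.kubernetes.io/component=mysql-ebs
}
```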

pod/catalog-mysql-ebs-0 condition met

NAME                  READY   STATUS    RESTARTS   AGE
catalog-mysql-ebs-0   1/1     Running   0          29s

Finally, let's exec back into the MySQL container shell and run an ls command on the /var/lib/mysql path to look for the test.txt file that we created and see whether it has persisted:
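A sketch of this final check, assuming the re-created Pod keeps the name catalog-mysql-ebs-0. It lists the file and then prints its content. The helper name is illustrative.

```shell
# Confirm test.txt survived the Pod re-creation: list it, then print what it contains.
check_test_file() {
  kubectl exec -n catalog catalog-mysql-ebs-0 -- ls -l /var/lib/mysql | grep -i test
  kubectl exec -n catalog catalog-mysql-ebs-0 -- cat /var/lib/mysql/test.txt
}
```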

-rw-r--r-- 1 mysql root 4 Oct 18 13:57 test.txt

123

As you can see, the test.txt file is still available after the Pod was deleted and re-created, and it still contains the right text, 123. This is the main functionality of Persistent Volumes (PVs). Amazon EBS stores the data and keeps it safe and available within an AWS Availability Zone.